21 Reproducible reports

We’ve seen throughout the course how to combine computer code, data, tabular and graphical results, and natural language writing to explain and communicate our work. Quarto (or R markdown) documents are a great tool for writing reports and ensuring they are reproducible. In this lesson we will examine a few more features of quarto and markdown to help customize your reports, make them easier to revise, and make them more effective data analysis communication tools.

21.1 What is reproducibility and why should I care?

Many people use R as an interactive computer tool. This means they sit at the keyboard, type a series of commands, and keep the results they want. It’s like a very fancy calculator. This is a fantastic way to explore data, learn R, and test out ideas quickly. But work done this way is not reproducible: you would need a recording of everything that was done to reproduce the analysis, and if you need to change a small step, you will need to repeat many or all of the steps by hand.

In this course, we have been using R in a slightly more disciplined way, at least for assigned work. For every project, you edit a quarto (.qmd) file, and then you write your computer instructions in code “chunks”, interspersed with English (or any other natural human language) explanations. With RStudio, you can still use R interactively in this mode, clicking the “play” button on each chunk and seeing the output. When your analysis is done, you can “render” the whole document. This does two important things:

- all the code is executed in order from top to bottom, and

- the results are kept in a new file.

You may have had the experience during the course of preparing your work, trying to render it, and discovering an error. This error is evidence that your quarto document is not complete, so if you rely on it, you will not be able to repeat your steps later on. So the first way quarto aids you in reproducing your work is by giving you an easy way to test if your instructions are complete. If you can render your file today, you can turn off your computer, and come back in a week, and be confident you will be able to reproduce your work then.

We have stressed the value of communication in this course. For some purposes you will just want to communicate by sending someone your report. When you work in a team, communication is not just about the rendered report, but it includes the instructions needed to recreate the report. Quarto is great for this. Most analyses will require more than just one quarto file. They will require data. This is why we have also learned to use R projects, version control, and to share files using GitHub. Now you can write a document to show someone else how to reproduce your work, from gathering data to making a report, and you can be confident that they will be able to make all the steps work and even add to your work in a time-efficient way.

21.2 What are barriers to reproducibility and how can I overcome them?

21.2.1 File paths

If you have a complex project, you will have multiple directories (or folders), and possibly many data files. When reading a data file, you will need to refer to the file by name. Make sure you never use the way files are organized on your computer as part of the script. In particular never write anything like:

my_data <- read_csv("/Users/airwin/Documents/Stats-project/data/my_data.csv")

because there is no way that will work on someone else’s computer! If you reorganize your Documents folder, it won’t even work on your computer! What should you do instead?

We have been using R projects and organizing all files for a project in a folder (and sub-folders) created just for that project. This is a good first step. The here package provides a useful function that allows you to refer to a file relative to the directory where your .Rproj file is stored. This is important to make your R code work on someone else’s computer. Here’s how you use it:

my_data <- read_csv(here("data", "annual-number-of-deaths-by-cause.csv"))

This tells R to find a folder called data in your project, then look for a file called annual-number-of-deaths-by-cause.csv in that folder. If this code works on your computer, with your R project, and you give the whole folder to a collaborator, you don’t need to worry how they organize their files.
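As a rough sketch of what here() returns, assuming the here package is installed: the call only builds a path, so you can inspect it before reading anything. The file name my_data.csv is hypothetical and used only for illustration.

```r
library(here)

# Build a path relative to the project root (the folder holding the .Rproj file).
# "my_data.csv" is a hypothetical file name used only for illustration.
data_path <- here("data", "my_data.csv")

# The result is an ordinary character string holding an absolute path.
data_path

# Check that the file is really there before trying to read it.
file.exists(data_path)
```

Because the absolute part of the path is worked out on each computer when the code runs, the same line of code works wherever the project folder is placed.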

Sometimes you will think a dataset is too large to put in your project folder. Or the data may be used by multiple projects, and you don’t want to have multiple copies. What should you do? The best options are: put the common data files in a GitHub repository (public or private), deposit the data in an online repository such as osf.io, or create an R data package of your own to manage the data on your computer and the computers of your collaborators. The R data package can be public, available to anyone, or you can keep it completely private on your own computer. All of these methods have the advantage of separating the data collection and validation process from the analysis and visualization process. Which method you prefer will depend on many factors, including the size of the data, how often it changes, whether it is public, and whether you are allowed to redistribute it.

21.2.2 Finding and installing R packages

R packages can be obtained from several sources. The most common sources are CRAN, Bioconductor, and GitHub. The most widely used packages are on CRAN and they are checked regularly to be sure they still work. Anyone can distribute a package on GitHub and make it available, but these packages are missing a level of quality control. We have not used Bioconductor in this course; it is a CRAN-like repository focused on bioinformatics computations.

Installing a new package from CRAN is easy. If you put the appropriate library function call in your R markdown document, but you are missing the package, RStudio will offer to download it for you. If the package is on GitHub, RStudio won’t be able to help. The usual process is to search for the package on Google, then install it. You will help yourself and your colleagues if you write the installation command next to your library function call, but preface it with a comment character # to stop the code from being executed. (There is an example at the top of the source for this file: you can find the source for all these notes on GitHub.)
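One way to lay this out is sketched below. The choice of packages is only an example, and the GitHub line assumes the remotes package is installed.

```r
# Installation commands are commented out so that rendering never reinstalls
# packages. Uncomment and run a line once, in the console, if a package is missing.

# install.packages("here")                      # from CRAN
library(here)

# A package hosted on GitHub needs a helper such as remotes:
# install.packages("remotes")
# remotes::install_github("easystats/report")   # then load it with library(report)
```

A collaborator who sees an error from library() can then find the matching installation command on the line just above it.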

21.3 Make your report look good

An easy to read report is a better report. Formatting will not turn a bad report into a good one, but good formatting can help make good results easier to digest.

Here are some specific recommendations for formatting your report:

- Use headings (lines starting with one or more #). Always put a blank line before and after a heading row.

- Use bulleted or numbered lists (start a sequence of lines with a * or 1.) when appropriate.

- Format your code nicely. Use a style guide. Or use an automatic code formatter such as styler (see below). Leave a blank line before and after your code chunks.

- Format your output nicely. This includes tables and figures. (See below.)

- Knit your report, read the formatted version, revise the markdown source, and repeat.

For examples of these formatting tips, see the source for this file.

Headings make your markdown document easier to navigate too. Look for the “show outline” button in the upper right of RStudio’s editing pane. For your homework assignments, I have set the quarto documents up to turn these headings into a table of contents on the rendered document.

21.4 Code formatting

There is a tidyverse style guide. Google has their own, revised from the tidyverse guide. It’s worthwhile reading these guides. Code that is formatted in a standard way is easier for you to read and easier for someone else to read.

Here is some carelessly formatted code.

Here is the same code (and its output) formatted using styler:

If you have loaded the styler package, you can use the Addins menu to style a selection of code. Highlight some code and try it out!

What makes code easier to read? Consistency, indentation when an idea continues from one line to the next, and keeping lines short. Consistency in spacing, use of upper and lower case, and naming of variables is valuable. I suggest you write neat and easy to read code in your R markdown documents. Don’t use automatic tidying as an excuse to make messy code.
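To make the contrast concrete, here is a small hypothetical illustration. The function names are invented for this sketch, and the tidy version was reformatted by hand following the tidyverse style; an automatic formatter such as styler aims for a similar result.

```r
# Carelessly formatted: cramped spacing, mixed assignment operators, one long line.
mean_messy<-function(x,drop_na=TRUE){if(drop_na){x=x[!is.na(x)]};sum(x)/length( x )}

# The same function, reformatted by hand in the tidyverse style.
mean_tidy <- function(x, drop_na = TRUE) {
  if (drop_na) {
    x <- x[!is.na(x)]
  }
  sum(x) / length(x)
}

# Formatting changes readability, not results.
values <- c(1, 2, NA, 3)
identical(mean_messy(values), mean_tidy(values))
```

Both versions compute the same value; only the second lets a reader see the structure of the function at a glance.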

21.5 Customization of result reporting in quarto

The first line of a code chunk can be as simple as {r} but you can also include many options between the braces. The markdown book describes the options available. Here I will demonstrate a few chunk options related to figures and formatting output.

Knitting a document usually stops when an error is encountered. This is a safety measure to alert you to a document which is not reproducible because of errors. On rare occasions you may want to use the chunk option error=TRUE to allow error messages to appear in knitted output and not stop the knitting process.

1 + "A"

Error in 1 + "A": non-numeric argument to binary operator

You can output graphics in multiple file formats by adding dev = c("png", "pdf") (and other formats) to the chunk options. These files will be deleted unless you request they be kept, which is easily done by caching the results of at least one code chunk.

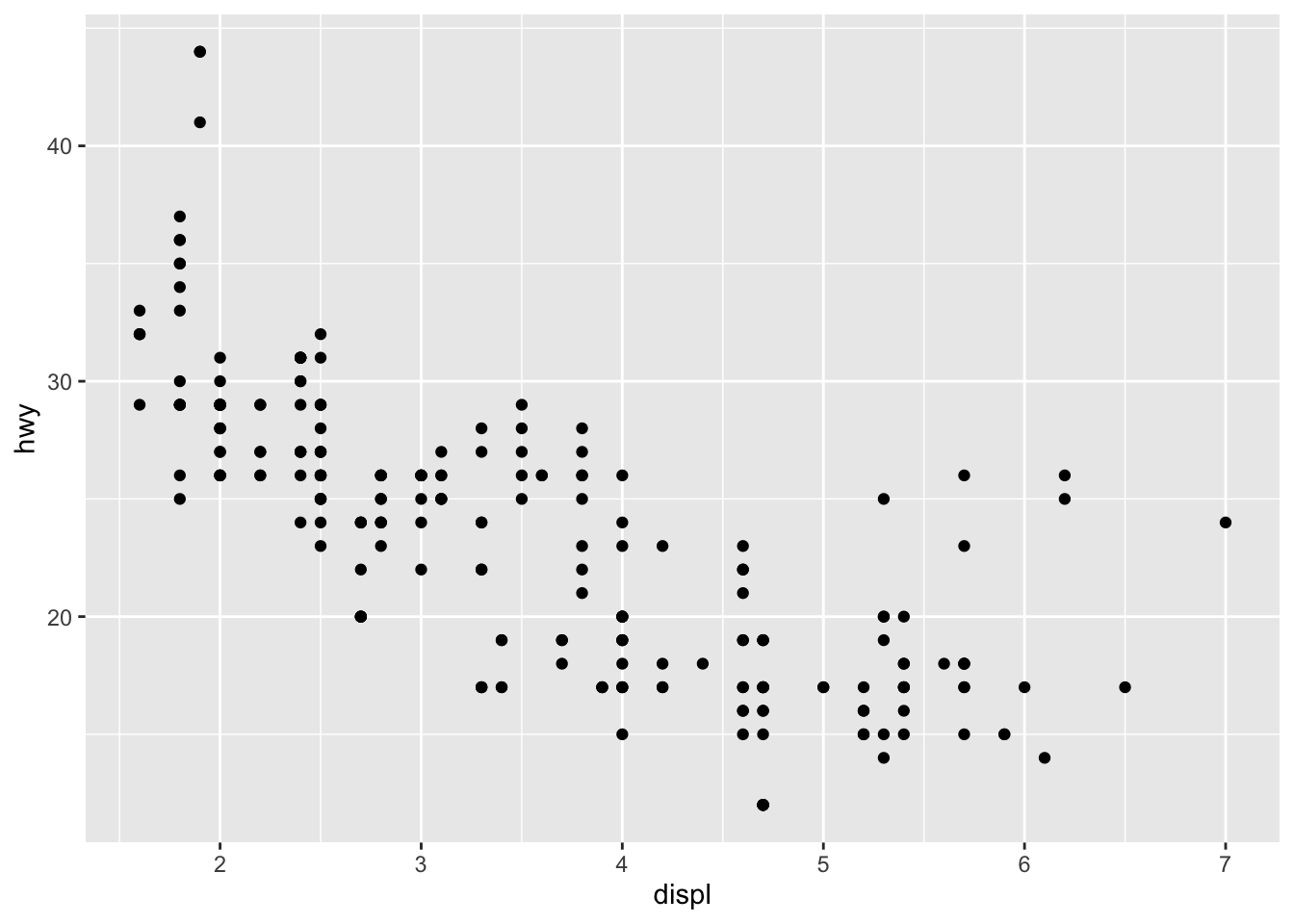

mpg |> ggplot(aes(displ, hwy)) + geom_point()

You can use chunk options to control whether the knitted document includes:

- code (echo=FALSE to hide)

- results (results='hide')

- messages (message=FALSE to hide)

- warnings (warning=FALSE to hide)

- plots (fig.show='hide')

- everything (include=FALSE)

You can also stop the code from being evaluated by setting eval=FALSE.
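Rather than repeating these options in every chunk, you can set defaults once in a chunk near the top of the document. This is a minimal sketch, assuming the document is rendered with knitr (the usual engine for R chunks); the particular choices are only an example.

```r
library(knitr)

# Set document-wide defaults; any single chunk can still override them.
opts_chunk$set(
  echo = FALSE,     # hide code
  message = FALSE,  # hide messages
  warning = FALSE   # hide warnings
)

# Confirm that a default took effect.
opts_chunk$get("echo")
```

Setting defaults this way keeps the chunk headers short, so the options that remain on individual chunks stand out as deliberate exceptions.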

If you have multiple lines of code with output in your chunk, the knitted document will contain several blocks of code and output with space between the blocks. These blocks can be combined into one by setting collapse=TRUE.

R code that is formatted in a standardized way is easier for others to read. You can get your code automatically reformatted using tidy=TRUE and the formatR package. The styler package is another approach to automatic reformatting of R code. Use tidy='styler' in the code chunk options.

You can change the size of a figure using fig.width = 6 and fig.height = 4 where 6 and 4 are lengths in inches. You can also use out.width="85%" to set the width of the figure as a proportion of the document width. You can center a figure horizontally using fig.align='center'.

You can use R variables and code in the chunk options.
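As a sketch of what this can look like, the example below stores figure dimensions in variables and computes one from the other. The variable names fig_w and fig_h are hypothetical, and the example assumes knitr is the rendering engine. A chunk header such as {r, fig.width = fig_w} uses a variable in the same way for a single chunk.

```r
library(knitr)

# Hypothetical variables holding the figure dimensions, in inches.
fig_w <- 6
fig_h <- fig_w * 2 / 3

# Option values are ordinary R expressions, so variables and arithmetic work.
opts_chunk$set(
  fig.width = fig_w,
  fig.height = fig_h,
  fig.align = "center"
)

opts_chunk$get("fig.height")
```

Changing fig_w in one place then resizes every figure consistently.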

21.6 Documenting which packages you use

If you use R for long enough and with enough other people, you will discover that R packages get revised and don’t always work the same way as they used to. This can be a major impediment to reproducibility. The simplest solution to this problem is to document the R packages you use in your analysis by adding a line of code to the end of your report that lists the packages and their version numbers in use. By looking at your knitted output, a user having trouble (possibly you in the future!) can look to see which packages have changed.
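A minimal sketch of such a closing chunk, using base R’s sessionInfo(); the variable name info is only for illustration.

```r
# Place this in the last chunk of the report.
info <- sessionInfo()
info

# The same object can be queried, for example for the R version string.
info$R.version$version.string
```

The listing that follows shows the kind of output this produces.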

R version 4.5.2 (2025-10-31)

Platform: aarch64-apple-darwin20

Running under: macOS Sequoia 15.6

Matrix products: default

BLAS: /System/Library/Frameworks/Accelerate.framework/Versions/A/Frameworks/vecLib.framework/Versions/A/libBLAS.dylib

LAPACK: /Library/Frameworks/R.framework/Versions/4.5-arm64/Resources/lib/libRlapack.dylib; LAPACK version 3.12.1

locale:

[1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

time zone: America/Halifax

tzcode source: internal

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] styler_1.11.0 gapminder_1.0.1 here_1.0.2 report_0.6.2

[5] lubridate_1.9.4 forcats_1.0.1 stringr_1.6.0 dplyr_1.1.4

[9] purrr_1.2.0 readr_2.1.6 tidyr_1.3.1 tibble_3.3.0

[13] ggplot2_4.0.1 tidyverse_2.0.0

loaded via a namespace (and not attached):

[1] gtable_0.3.6 jsonlite_2.0.0 compiler_4.5.2 tidyselect_1.2.1

[5] scales_1.4.0 fastmap_1.2.0 R6_2.6.1 labeling_0.4.3

[9] generics_0.1.4 knitr_1.50 htmlwidgets_1.6.4 rprojroot_2.1.1

[13] insight_1.4.4 R.cache_0.17.0 pillar_1.11.1 RColorBrewer_1.1-3

[17] tzdb_0.5.0 R.utils_2.13.0 rlang_1.1.6 stringi_1.8.7

[21] xfun_0.55 S7_0.2.1 timechange_0.3.0 cli_3.6.5

[25] withr_3.0.2 magrittr_2.0.4 digest_0.6.38 grid_4.5.2

[29] rstudioapi_0.17.1 hms_1.1.4 lifecycle_1.0.4 R.oo_1.27.1

[33] R.methodsS3_1.8.2 vctrs_0.6.5 evaluate_1.0.5 glue_1.8.0

[37] farver_2.1.2 rmarkdown_2.30 tools_4.5.2 pkgconfig_2.0.3

[41] htmltools_0.5.8.1

If you want to produce bibliographic citations for your packages you can use the report package:

report::cite_packages()

- Bryan J (2025). gapminder: Data from Gapminder. doi:10.32614/CRAN.package.gapminder https://doi.org/10.32614/CRAN.package.gapminder, R package version 1.0.1, https://CRAN.R-project.org/package=gapminder.

- Grolemund G, Wickham H (2011). “Dates and Times Made Easy with lubridate.” Journal of Statistical Software, 40(3), 1-25. https://www.jstatsoft.org/v40/i03/.

- Makowski D, Lüdecke D, Patil I, Thériault R, Ben-Shachar M, Wiernik B (2023). “Automated Results Reporting as a Practical Tool to Improve Reproducibility and Methodological Best Practices Adoption.” CRAN. doi:10.32614/CRAN.package.report https://doi.org/10.32614/CRAN.package.report, https://easystats.github.io/report/.

- Müller K (2025). here: A Simpler Way to Find Your Files. doi:10.32614/CRAN.package.here https://doi.org/10.32614/CRAN.package.here, R package version 1.0.2, https://CRAN.R-project.org/package=here.

- Müller K, Walthert L, Patil I (2025). styler: Non-Invasive Pretty Printing of R Code. doi:10.32614/CRAN.package.styler https://doi.org/10.32614/CRAN.package.styler, R package version 1.11.0, https://CRAN.R-project.org/package=styler.

- Müller K, Wickham H (2025). tibble: Simple Data Frames. doi:10.32614/CRAN.package.tibble https://doi.org/10.32614/CRAN.package.tibble, R package version 3.3.0, https://CRAN.R-project.org/package=tibble.

- R Core Team (2025). R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/.

- Wickham H (2016). ggplot2: Elegant Graphics for Data Analysis. Springer-Verlag New York. ISBN 978-3-319-24277-4, https://ggplot2.tidyverse.org.

- Wickham H (2025). forcats: Tools for Working with Categorical Variables (Factors). doi:10.32614/CRAN.package.forcats https://doi.org/10.32614/CRAN.package.forcats, R package version 1.0.1, https://CRAN.R-project.org/package=forcats.

- Wickham H (2025). stringr: Simple, Consistent Wrappers for Common String Operations. doi:10.32614/CRAN.package.stringr https://doi.org/10.32614/CRAN.package.stringr, R package version 1.6.0, https://CRAN.R-project.org/package=stringr.

- Wickham H, Averick M, Bryan J, Chang W, McGowan LD, François R, Grolemund G, Hayes A, Henry L, Hester J, Kuhn M, Pedersen TL, Miller E, Bache SM, Müller K, Ooms J, Robinson D, Seidel DP, Spinu V, Takahashi K, Vaughan D, Wilke C, Woo K, Yutani H (2019). “Welcome to the tidyverse.” Journal of Open Source Software, 4(43), 1686. doi:10.21105/joss.01686 https://doi.org/10.21105/joss.01686.

- Wickham H, François R, Henry L, Müller K, Vaughan D (2023). dplyr: A Grammar of Data Manipulation. doi:10.32614/CRAN.package.dplyr https://doi.org/10.32614/CRAN.package.dplyr, R package version 1.1.4, https://CRAN.R-project.org/package=dplyr.

- Wickham H, Henry L (2025). purrr: Functional Programming Tools. doi:10.32614/CRAN.package.purrr https://doi.org/10.32614/CRAN.package.purrr, R package version 1.2.0, https://CRAN.R-project.org/package=purrr.

- Wickham H, Hester J, Bryan J (2025). readr: Read Rectangular Text Data. doi:10.32614/CRAN.package.readr https://doi.org/10.32614/CRAN.package.readr, R package version 2.1.6, https://CRAN.R-project.org/package=readr.

- Wickham H, Vaughan D, Girlich M (2024). tidyr: Tidy Messy Data. doi:10.32614/CRAN.package.tidyr https://doi.org/10.32614/CRAN.package.tidyr, R package version 1.3.1, https://CRAN.R-project.org/package=tidyr.

# See also report::report(sessionInfo())

To absolutely guarantee you can use R code in the future, some applications will benefit from you keeping copies of all the required packages on your own computer system. The packrat and checkpoint packages can help you manage packages. I have never felt the need to have this level of reproducibility, but I find it reassuring to know these tools exist.

21.7 Using other languages

Quarto allows you to combine several different programming languages, including Python and Julia, in the same document. For more information see the Quarto website.

Related to the topic of inter-operation of computing languages, but departing from the topic of R markdown, you can also write C++ code in an R session and execute the compiled code directly from R. This is very valuable if you have a calculation that is slow in R, but would be faster in a compiled language like C++.

SQL is a language for describing queries to a widely used style of database. R contains tools for interacting with SQL databases, but it can also generate SQL code from dplyr functions. Here’s an example showing how R can generate SQL queries from dplyr calculations.

library(dbplyr)

library(RSQLite)

con <- DBI::dbConnect(RSQLite::SQLite(), dbname = ":memory:")

copy_to(con, palmerpenguins::penguins, "penguins")

penguins <- tbl(con, "penguins")

penguins_aggr <-

penguins |>

group_by(species) |>

summarize(

N = n(),

across(ends_with("mm"), sum, .names = "TOT_{.col}"),

across(ends_with("mm"), mean, .names = "AVG_{.col}"),

)

penguins_aggr

# Source: SQL [?? x 8]

# Database: sqlite 3.51.1 [:memory:]

species N TOT_bill_length_mm TOT_bill_depth_mm TOT_flipper_length_mm

<chr> <int> <dbl> <dbl> <int>

1 Adelie 152 5858. 2770. 28683

2 Chinstrap 68 3321. 1253. 13316

3 Gentoo 124 5843. 1843. 26714

# ℹ 3 more variables: AVG_bill_length_mm <dbl>, AVG_bill_depth_mm <dbl>,

# AVG_flipper_length_mm <dbl>

capture.output(show_query(penguins_aggr))

[1] "<SQL>"

[2] "SELECT"

[3] " `species`,"

[4] " COUNT(*) AS `N`,"

[5] " SUM(`bill_length_mm`) AS `TOT_bill_length_mm`,"

[6] " SUM(`bill_depth_mm`) AS `TOT_bill_depth_mm`,"

[7] " SUM(`flipper_length_mm`) AS `TOT_flipper_length_mm`,"

[8] " AVG(`bill_length_mm`) AS `AVG_bill_length_mm`,"

[9] " AVG(`bill_depth_mm`) AS `AVG_bill_depth_mm`,"

[10] " AVG(`flipper_length_mm`) AS `AVG_flipper_length_mm`"

[11] "FROM `penguins`"

[12] "GROUP BY `species`"

rm(con)

What does using other tools have to do with making your work reproducible? Quarto is a flexible tool that lets you use more than just R, so if your workflow contains steps external to R, they can sometimes still be included in your report.

21.8 Packages used

In addition to tidyverse and gapminder, code in this lesson uses the packages

- report, which is available on GitHub and can be installed using remotes::install_github("easystats/report"), for the cite_packages function,

- here, to help find files in a project, even when you move the project to a new computer,

- styler, for formatting R code,

- dbplyr and RSQLite, for working with databases.

21.9 Further reading

- Repository of these course notes for ideas on formatting and preparing R markdown documents

- Project workflow from R4DS

- Generating SQL with dbplyr

- Peng. Reproducible research in computational science. (Peng 2011)

- LeVeque et al. Reproducible research for scientific computing (LeVeque, Mitchell, and Stodden 2012)