19 Finding and accessing data

Data are available from a huge number of sites on the internet run by governments, NGOs, companies, industry associations, research projects, and individuals. These data come in a variety of formats, and while many will be easy to read with R, some will be quite challenging. In this lesson I will introduce you to some sources of data. Partly this is to help you start to learn how to navigate a variety of data sources, but of course part of the reason is to get you thinking about data you might use in your project. For more information on the project, see the description on the evaluation page.

19.1 Finding data

19.1.1 Data in R packages

Many datasets are available as part of R packages. These are the easiest to use, but they are often small and designed for demonstration purposes. For example, the gapminder package contains only a small portion of the data available on the Gapminder website.

These are the go-to datasets that we have used for demonstrating many simple visualizations:

- mtcars and many other well-known datasets in the datasets package

- penguins in the palmerpenguins package

- gapminder in the gapminder package (see also the Gapminder website)

- diamonds, mpg, economics, midwest, msleep in the ggplot2 package

- the nycflights13 data (flights, airlines, airports, planes, weather) in the nycflights13 package

- gss_sm, gss_sib, and gss_lon in the socviz package (gss_cat is in the forcats package)

The function datasets in the package datasets.load will display a list of all datasets in the R packages you have installed on your computer. There is not much information about each dataset, but once you know their names, you can read the help page for each dataset.
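Base R can produce a similar list without any extra package. The sketch below uses data() with a package argument; the object it returns has a results matrix whose columns include "Item" (the dataset name) and "Title". Listing every installed package can take a moment and may print a few warnings, which are harmless.

```r
# List the datasets shipped with the datasets package
ds <- data(package = "datasets")
head(ds$results[, c("Item", "Title")])

# List datasets from every installed package (may be slow, may warn)
all_ds <- data(package = .packages(all.available = TRUE))
nrow(all_ds$results)

# Once you know a name, open its help page in an interactive session:
# ?mtcars
```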

19.1.2 Tidy Tuesday

Tidy Tuesday is a project to encourage people to develop skills at data visualization and analysis using the tidyverse packages in R. Each week a new data set is posted and participants experiment to create new visualizations. Some people share their work on GitHub and social media.

19.1.3 R packages for accessing data

There are many packages designed primarily to provide access to large collections of data. Here are a few examples.

- OECD for data from the OECD

- cansim for data from Statistics Canada

- cancensus for data from the Canadian census and the National Household Survey. You need to create an account and get an API key to use this package. The package documentation shows you how.

19.1.4 Websites with data collections

Naturally there are websites that curate lists of data available from other sites. Here are a few I’ve found useful.

- Our World in Data is a curated set of over 3000 charts with documentation and open source data.

- The awesome public datasets project collects high quality public data, organized thematically.

- This is one person’s collection of data from various sources

- r-dir has a list of freely available datasets

- World Bank economic development indicators

- CORGIS, the Collection of Really Great, Interesting, Situated Datasets

19.2 Examples of accessing data

19.2.1 Gapminder

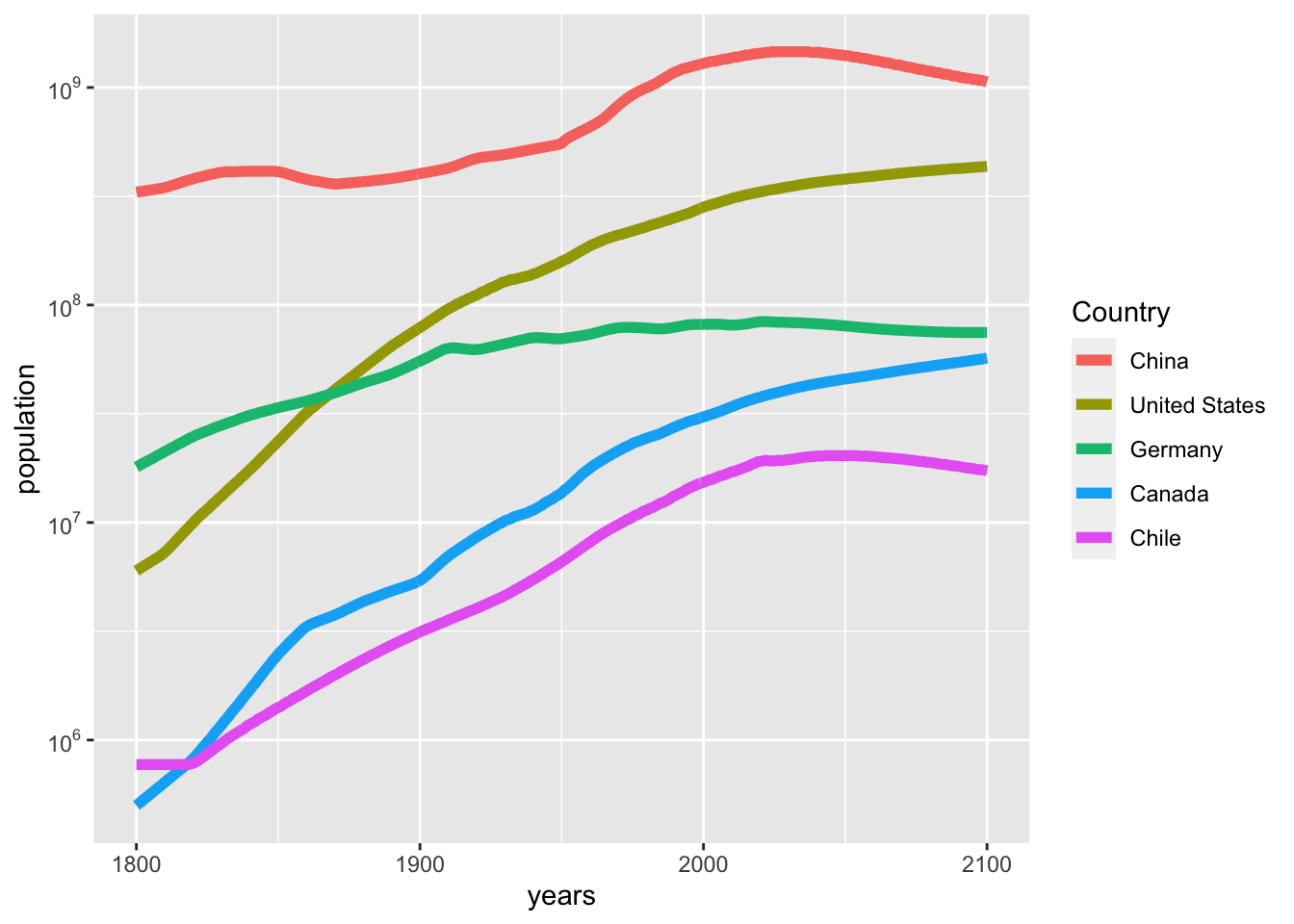

Gapminder distributes many datasets, some of their own, and some collected by other organizations that they are redistributing. They have a webpage to help you search, browse and access the data. Using this webpage I have found data on the population of countries by year from 1800 to 2100 (with many missing values, some interpolated values, and of course many years of projections). Here is some R code to read and work with these data. There are data on 195 countries over 301 years. I have selected five countries to make a simple plot.

```r
library(tidyverse)  # readr, dplyr, tidyr, forcats, ggplot2
library(scales)     # trans_format(), math_format()

pop <- read_csv("static/population_total.csv")
## ── Column specification ────────────────────────────────────────────────────────
## cols(
##   .default = col_double(),
##   country = col_character()
## )
## ℹ Use `spec()` for the full column specifications.
```
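The column specification that read_csv prints can be passed back through the col_types argument, which fixes the column types instead of relying on guessing and silences the message. The sketch below assumes a tiny hypothetical file in the same wide layout as population_total.csv (one row per country, one column per year); the country names and numbers are placeholders, not Gapminder data.

```r
library(readr)

# Hypothetical miniature file with the same wide layout as the Gapminder CSV
path <- tempfile(fileext = ".csv")
writeLines(c("country,1800,1801",
             "Country A,100,110",
             "Country B,200,190"), path)

# Reuse the printed specification: every column is a double except country
pop_small <- read_csv(path,
                      col_types = cols(.default = col_double(),
                                       country = col_character()))
spec(pop_small)
```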

```r
pop %>%
  filter(country %in% c("Canada", "China", "Chile", "Germany", "United States")) %>%
  # one row per country and year instead of one column per year
  pivot_longer(`1800`:`2100`, names_to = "years", values_to = "population") %>%
  mutate(years = as.numeric(years)) %>%
  ggplot(aes(x = years,
             y = population,
             # order the legend by each country's maximum population
             color = fct_reorder(country, population, max, .desc = TRUE))) +
  geom_line(linewidth = 2) +  # use size = 2 with ggplot2 versions before 3.4.0
  scale_y_log10(labels = trans_format("log10", math_format(10^.x))) +
  labs(color = "Country")
```
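The key step above is reshaping the wide table (one column per year) into long form. A minimal sketch on a hypothetical two-country table (placeholder values) shows two small variations: names_transform converts the year names to integers during the pivot, replacing the separate mutate() step, and a grouped summarize() counts the missing values in each year, which is a quick way to see where the gaps in a table like this one are.

```r
library(tibble)
library(tidyr)
library(dplyr)

# Hypothetical wide table: one row per country, one column per year
wide <- tribble(
  ~country,    ~`1800`, ~`1801`,
  "Country A", 100,     NA,
  "Country B", 200,     190
)

# Pivot to long form and convert the year names to integers in one step
long <- wide %>%
  pivot_longer(-country, names_to = "year", values_to = "population",
               names_transform = list(year = as.integer))

# Count missing population values in each year
missing_by_year <- long %>%
  group_by(year) %>%
  summarize(n_missing = sum(is.na(population)))
missing_by_year
```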

19.2.2 Consumer price index in Canada

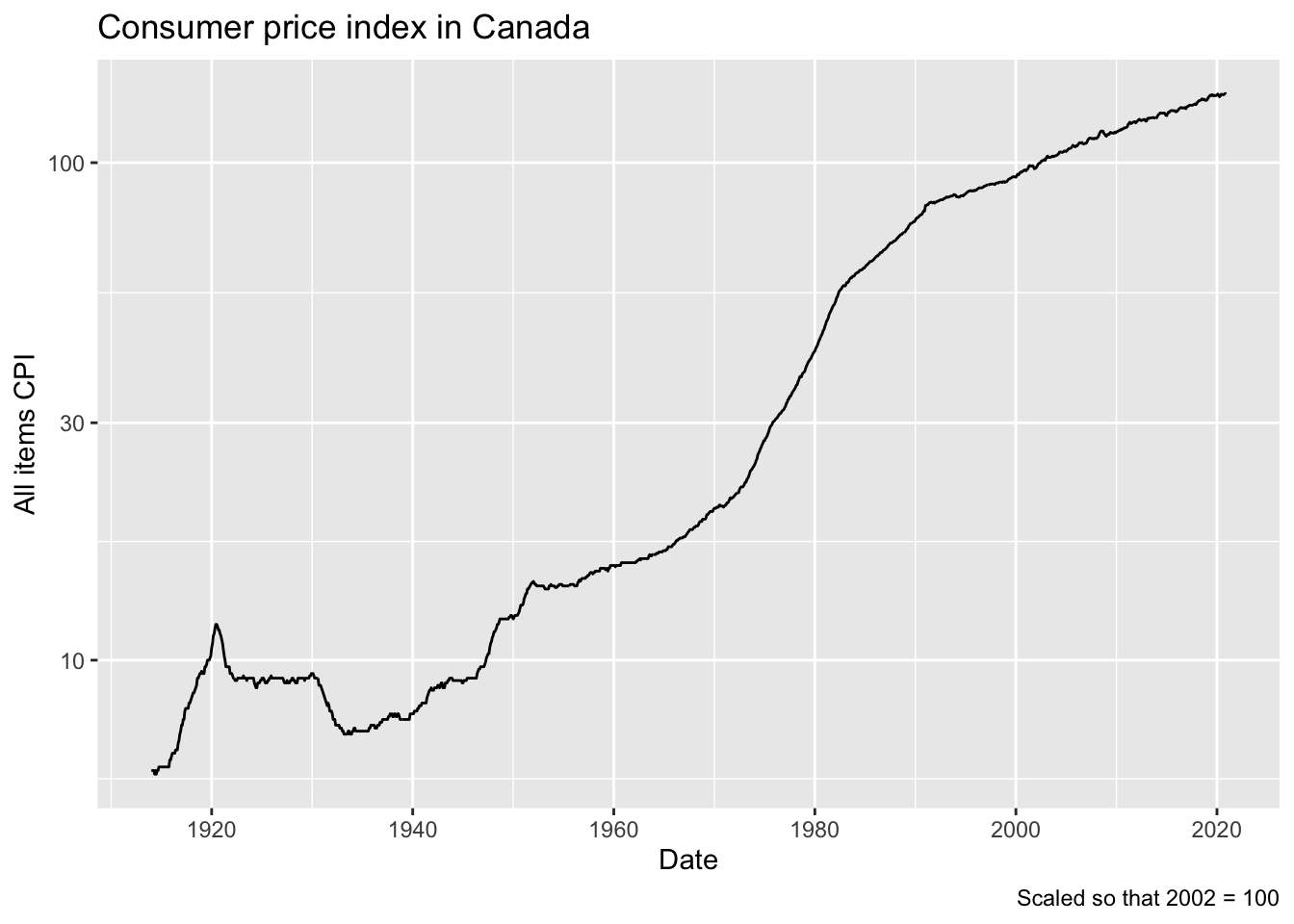

One measure of inflation is its effect on prices. Here is a table from Statistics Canada that reports over 1 million rows of data on this measure. Statistics Canada tables are an extreme version of “long” data, which usually require a lot of filtering to get just the rows you want. You will want to use the dplyr functions summarize and count to study the structure of the data.
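Statistics Canada tables are too large to reproduce here, so as a stand-in the sketch below applies count() and summarize() to economics_long from ggplot2, which is also stored in long form (one row per date and series). The same two questions, how many rows each category has and what range each one covers, are a reasonable first look at a table like the CPI data.

```r
library(dplyr)
library(ggplot2)  # provides the economics and economics_long data

# How many rows does each series contribute?
counts <- economics_long %>% count(variable)
counts

# What date range does each series cover?
date_ranges <- economics_long %>%
  group_by(variable) %>%
  summarize(first = min(date), last = max(date), n = n())
date_ranges
```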

```r
# Population and projections in a huge table: 2 million rows, 300 MB of data
# cansim::get_cansim("17100057")

cpi <- cansim::get_cansim("18-10-0004-01")
## Accessing CANSIM NDM product 18-10-0004 from Statistics Canada
## Parsing data
## Folding in metadata

cpi %>%
  filter(`Products and product groups` == "All-items") %>%
  count(GEO)
## # A tibble: 30 x 2
##    GEO                                                    n
##    <chr>                                              <int>
##  1 Alberta                                              508
##  2 British Columbia                                     508
##  3 Calgary, Alberta                                     600
##  4 Canada                                              1284
##  5 Charlottetown and Summerside, Prince Edward Island   564
##  6 Edmonton, Alberta                                    600
##  7 Halifax, Nova Scotia                                 600
##  8 Iqaluit, Nunavut                                     217
##  9 Manitoba                                             508
## 10 Montréal, Quebec                                     600
## # … with 20 more rows
```

Let’s look at these data for Canada as a whole.

```r
cpi %>%
  filter(`Products and product groups` == "All-items",
         GEO == "Canada") %>%
  select(REF_DATE, VALUE) %>%
  # REF_DATE is a "YYYY-MM" string; ym() converts it to a Date
  mutate(date = lubridate::ym(REF_DATE)) %>%
  ggplot(aes(x = date, y = VALUE)) +
  geom_line() +
  labs(x = "Date", y = "All items CPI",
       title = "Consumer price index in Canada",
       caption = "Scaled so that 2002 = 100") +
  scale_y_log10()
```

19.2.3 Causes of death worldwide

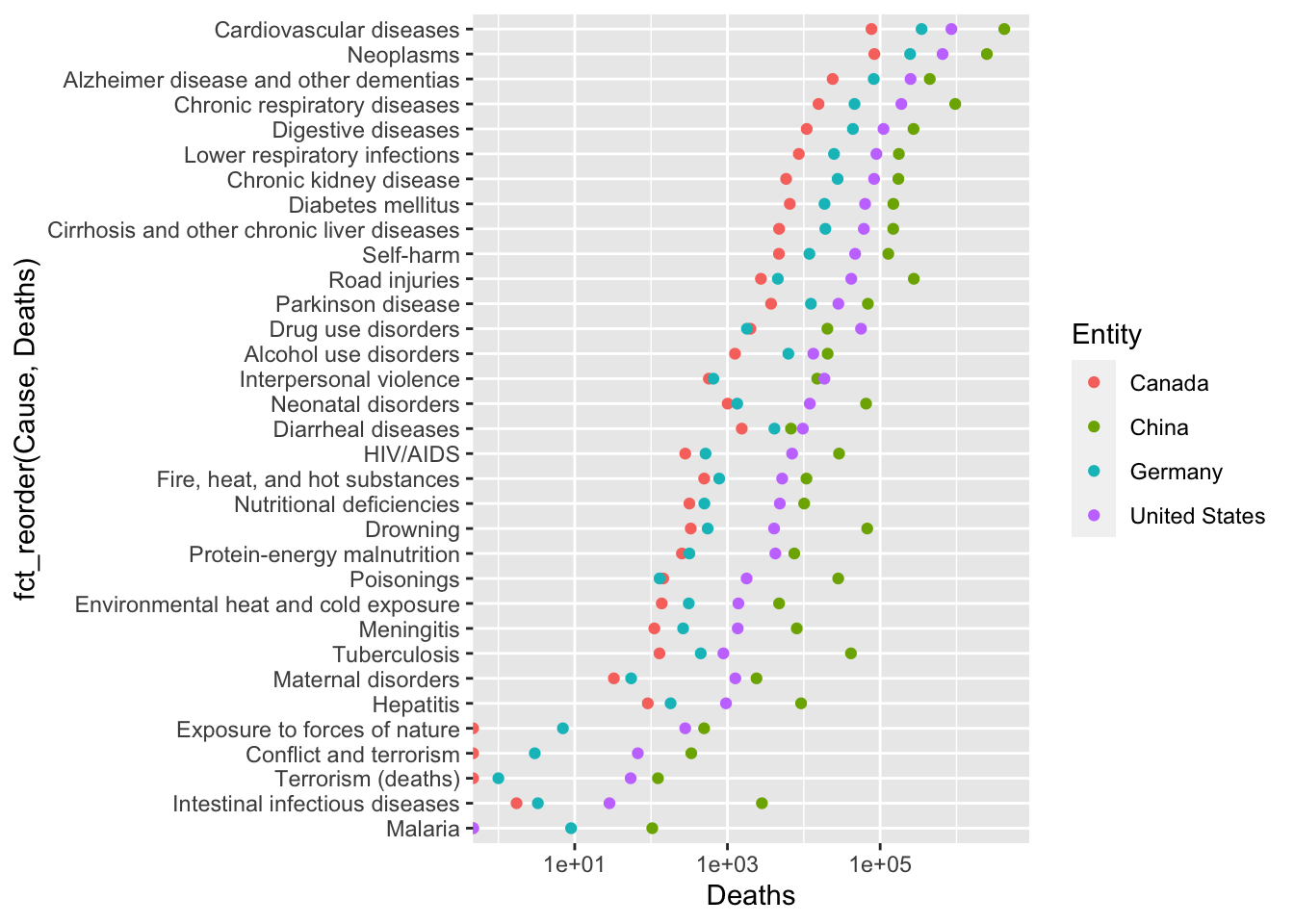

Our World in Data has many compilations of data across many countries. Here is a table listing causes of death over time and across countries. There is a link to a CSV file on that page that can be easily read with R. As is often the case, this is a large table (nearly 7000 observations of 37 variables). You will need to explore the data a bit to understand it. Here is one simple plot that can be made.

```r
# guess column types from the first 10000 rows instead of the default 1000
death <- read_csv("static/annual-number-of-deaths-by-cause.csv", guess_max = 10000)
## ── Column specification ────────────────────────────────────────────────────────
## cols(
##   .default = col_double(),
##   Entity = col_character(),
##   Code = col_character(),
##   `Number of executions (Amnesty International)` = col_character()
## )
## ℹ Use `spec()` for the full column specifications.

death %>%
  filter(Entity %in% c("Canada", "China", "Germany", "United States"),
         Year == 2015) %>%
  select(Entity, `Deaths - Road injuries - Sex: Both - Age: All Ages (Number)`:`Terrorism (deaths)`) %>%
  pivot_longer(`Deaths - Road injuries - Sex: Both - Age: All Ages (Number)`:`Terrorism (deaths)`,
               names_to = "Cause", values_to = "Deaths") %>%
  # shorten the long column names to just the cause of death
  mutate(Cause = str_remove(Cause, "Deaths - ") %>%
           str_remove(" - Sex: Both - Age: All Ages \\(Number\\)")) %>%
  ggplot(aes(x = Deaths, y = fct_reorder(Cause, Deaths),
             color = Entity)) +
  geom_point() +
  scale_x_log10()  # causes with zero deaths map to -Inf on a log scale
## Warning: Transformation introduced infinite values in continuous x-axis
```
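The column-name cleanup uses str_remove() with regular expressions, which is why the parentheses in "(Number)" are escaped as \\( and \\). A small sketch on two of the column names shows that wrapping the pattern in stringr's fixed() matches it as literal text, so no escaping is needed and both versions give the same result.

```r
library(stringr)
library(magrittr)  # for %>%

causes <- c("Deaths - Road injuries - Sex: Both - Age: All Ages (Number)",
            "Terrorism (deaths)")

# Regular-expression patterns: parentheses must be escaped
regex_clean <- causes %>%
  str_remove("Deaths - ") %>%
  str_remove(" - Sex: Both - Age: All Ages \\(Number\\)")

# Literal patterns with fixed(): the text is matched exactly as written
fixed_clean <- causes %>%
  str_remove(fixed("Deaths - ")) %>%
  str_remove(fixed(" - Sex: Both - Age: All Ages (Number)"))

regex_clean
```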

Of course, it would probably be more interesting to plot these as per capita deaths; or at least, that would enable a different sort of comparison.

19.4 Describing data

Once you have done work to find data, you will also want to do some research to learn the “5 Ws” of data. In addition to what the variables are and what each observation represents, you will want to know who collected it, when, why, and how. It’s a good idea to write a “readme” to summarize what you learn. For your term project you will be asked to provide some information on the datasets you analyze, but you should also be aware that there are online guides that provide advice on documenting data.

19.5 Distribution of data

If you want to distribute an analysis of data or redistribute the original data, please be sure to respect the terms of use of the data. Many people encourage following the FAIR (Findable, Accessible, Interoperable, Reusable) principles for data.

19.6 Further reading

- The data import chapter of R for Data Science (R4DS)

- Roger Peng’s notes on importing data

- An older but comprehensive guide to importing data